Then, navigate to the file \bitsandbytes\cuda_setup\main.py and open it with your favorite text editor. Enter these commands one at a time: git clone Ĭonda install -c conda-forge cudatoolkit-devĭownload libbitsandbytes_cuda116.dll and put it in C:\Users\MYUSERNAME\miniconda3\envs\textgen\Lib\site-packages\bitsandbytes\ Navigate to the directory you want to put the Oobabooga folder in. GPTQ-for-LLaMA is the 4-bit quandization implementation for LLaMA. It's like AUTOMATIC1111's Stable Diffusion WebUI except it's for language instead of images.

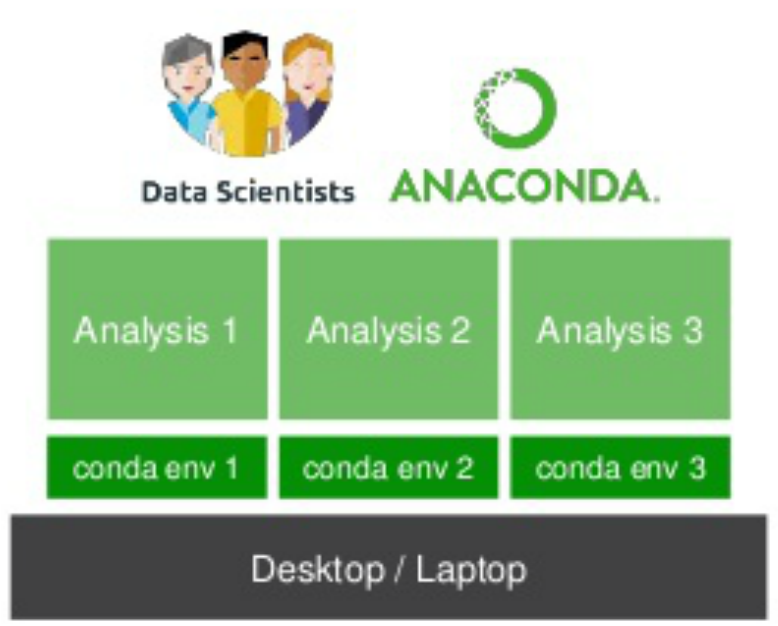

Oobabooga is a good UI to run your models with. If you get conda issues, you'll need to add conda to your PATH. It will take some time for the packages to download. Open the application Anaconda Prompt (miniconda3) and run these commands one at a time. Install Git if you don't already have it. In the Visual Studio Build Tools installer, check the Desktop development with C++ option and install:ĭownload and install miniconda. Install Prerequisites Build Tools for Visual Studio 2019ĭownload " 2019 Visual Studio and other products" (requires creating a Microsoft account). For Mac M1/M2, please look at these instructions instead. These instructions are for Windows & Linux. Here's some user-reported requirements for each model: Model Thanks to the efforts of many developers, we can now run 4-bit LLaMA on most consumer grade computers. Meta reports the 65B model is on-parr with Google's PaLM-540B in terms of performance.Ĥ-bit quantization is a technique for reducing the size of models so they can run on less powerful hardware.Meta reports that the LLaMA-13B model outperforms GPT-3 in most benchmarks.There are four different pre-trained LLaMA models, with 7B (billion), 13B, 30B, and 65B parameters.This means LLaMA is the most powerful language model available to the public. A troll later attempted to add the torrent magnet link to Meta's official LLaMA Github repo. On March 3rd, user ‘llamanon’ leaked Meta's LLaMA model on 4chan’s technology board /g/, enabling anybody to torrent it.
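To see why 4-bit quantization lets LLaMA fit on consumer hardware, here is a rough sketch. It does two things. First, it estimates weight storage at 16-bit versus 4-bit for the four model sizes. Second, it shows a toy round-to-nearest 4-bit quantizer. Several assumptions apply that do not come from this guide. The parameter counts are the nominal 7B/13B/30B/65B figures. Only the weights are counted, with no allowance for quantization scales, activations, or context memory. The quantizer is a simplified stand-in, **not** the GPTQ method that GPTQ-for-LLaMA uses. The helper names (`weight_gib`, `quantize_4bit`, `dequantize_4bit`) are hypothetical.

```python
# Rough sketch: weight memory for LLaMA at 16-bit vs 4-bit, plus a toy quantizer.
# Assumptions (not from the guide): nominal parameter counts, weights only,
# no overhead for quantization scales, activations, or context memory.
# The quantizer below is naive round-to-nearest, NOT the GPTQ algorithm.

NOMINAL_PARAMS = {"7B": 7e9, "13B": 13e9, "30B": 30e9, "65B": 65e9}


def weight_gib(num_params: float, bits_per_weight: int) -> float:
    """Return the storage needed for the weights alone, in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 2**30


def quantize_4bit(weights: list[float]) -> tuple[list[int], float]:
    """Map each weight to an integer code in [-8, 7] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Largest magnitude maps to code 7; avoid dividing by zero for all-zero input.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_4bit(codes: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the 4-bit codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    for name, n in NOMINAL_PARAMS.items():
        print(f"{name}: {weight_gib(n, 16):6.1f} GiB at 16-bit, "
              f"{weight_gib(n, 4):5.1f} GiB at 4-bit")

    sample = [0.12, -0.5, 0.33, 0.0, 0.49]
    codes, scale = quantize_4bit(sample)
    print(codes, [round(x, 3) for x in dequantize_4bit(codes, scale)])
```

Going from 16 bits to 4 bits per weight cuts weight storage by a factor of four. The cost is rounding error of up to half a quantization step per weight. Real implementations such as GPTQ try to reduce the impact of that error on the model's outputs, which this toy version does not attempt.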
